As part of the Stereo Theatre project, i3Dr is integrating our high-resolution stereo camera system and proprietary stereo matching algorithm into the Unity game engine.

This will allow us to render detailed 3D information about a patient in an operating theatre in real time for viewing in virtual reality (VR).

Unity is a game engine and development platform widely used in industry. Its cross-platform support makes it well suited to running on many different systems and to building quickly for different architectures. Unity is designed for real-time applications and provides fast simulation of physics and lighting. It supports a wide range of VR devices through its XR management tools and third-party plugins provided by headset manufacturers. This project uses Unity to display 3D data captured by i3Dr's stereo cameras to a user in VR. This is a non-trivial task: a high density of data must be rendered without the frame rate (frames per second, FPS) dropping far enough to make the experience uncomfortable for the user. This is particularly important in VR, where lag in displaying the environment can cause motion sickness.
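To put the FPS constraint in numbers, the time available to produce each frame is simply 1000 ms divided by the frame rate. The short Python sketch below works this out for the 30 fps mesh rate reported later in this article and for 72 Hz, which is assumed here as a typical standalone-headset refresh rate (the original Oculus Quest default). The one-million-point figure is likewise taken as an approximation of the mesh size described later; nothing here reflects i3Dr's own measurements.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to produce one frame, in milliseconds."""
    return 1000.0 / fps


def points_per_second(points_per_frame: int, fps: float) -> float:
    """Depth samples that must be processed each second to sustain `fps`."""
    return points_per_frame * fps


# 30 fps: mesh rate reported in this article; 72 Hz: assumed headset refresh rate.
for fps in (30, 72):
    print(f"{fps:>3} fps -> {frame_budget_ms(fps):.1f} ms per frame")

# Assumed: a mesh of roughly one million depth points, updated every frame.
print(f"{points_per_second(1_000_000, 30):,.0f} points/s at 30 fps")
```

At 72 Hz the whole capture, matching and rendering chain has under 14 ms per frame, which is why data density and frame rate pull against each other.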

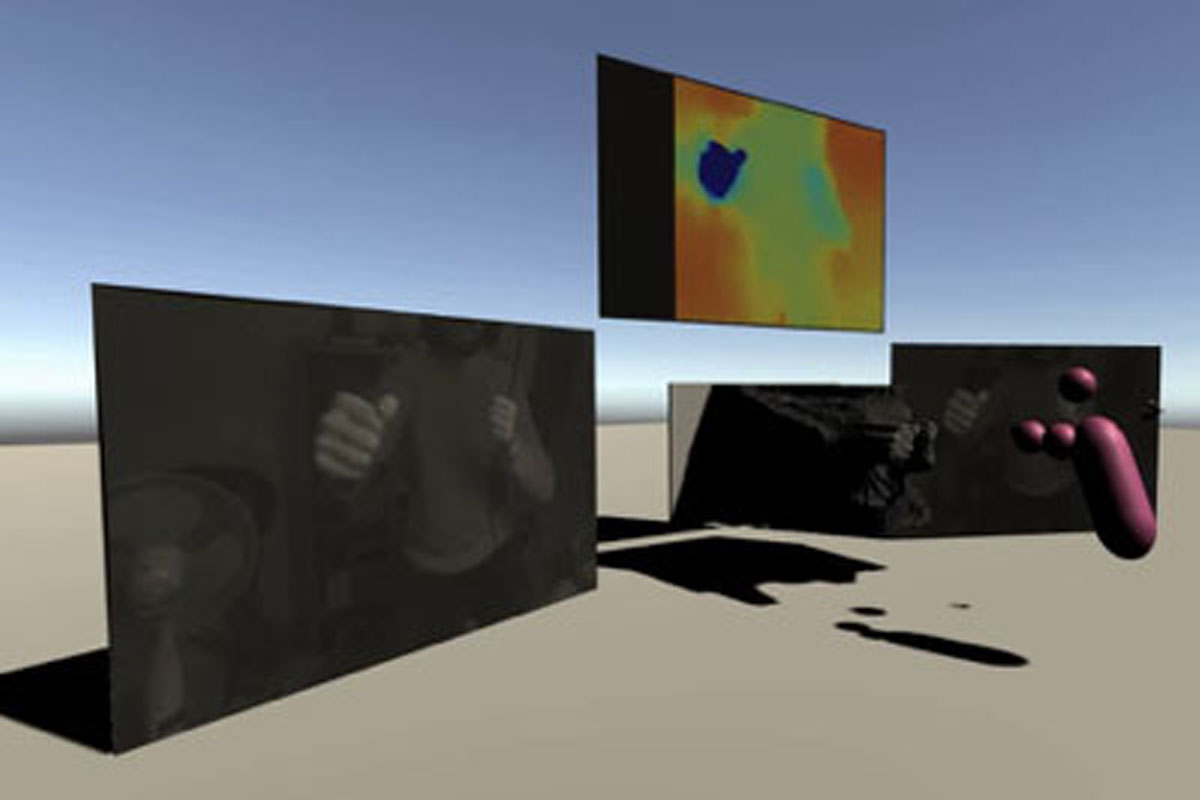

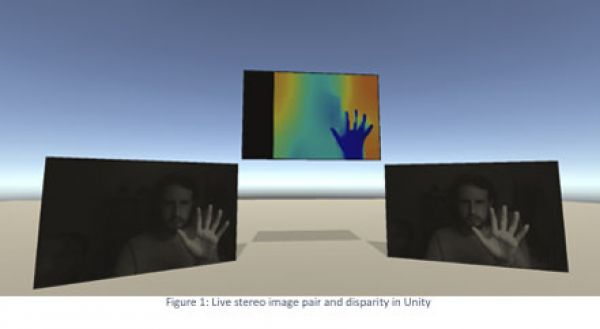

To generate 3D information in Unity, the cameras must first be integrated into the platform. i3Dr's Deimos stereo camera has now been integrated, and its live feed can be displayed in a scene alongside the disparity image. Disparity is the horizontal shift, in pixels, of a point between the left and right camera images; it is larger for closer objects, so the disparity image is a visual representation of each object's depth from the camera. Close objects are shown in blue and distant objects in red. A colour scale is used because humans are much better at distinguishing differences in colour than differences in intensity.
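The colour map i3Dr uses is not specified here, so the following is only a minimal Python sketch of the idea: disparities are normalised to the range 0 to 1 and blended linearly from red (smallest disparity, farthest) to blue (largest disparity, nearest), matching the convention described above. Treating disparity values of zero or below as "no match" is an assumption of this sketch; many tools instead use multi-hue maps such as jet, which pass through cyan, green and yellow.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]


def disparity_to_colour(disparity: List[List[float]]) -> List[List[RGB]]:
    """Map a disparity image to a red-to-blue colour scale.

    Larger disparity means the surface is closer to the camera, so the largest
    disparity is drawn pure blue and the smallest pure red. Pixels with no
    valid match (disparity <= 0, an assumption of this sketch) are drawn black.
    """
    valid = [d for row in disparity for d in row if d > 0]
    if not valid:
        return [[(0, 0, 0) for _ in row] for row in disparity]
    d_min, d_max = min(valid), max(valid)
    span = (d_max - d_min) or 1.0  # avoid division by zero on a flat image

    out: List[List[RGB]] = []
    for row in disparity:
        out_row: List[RGB] = []
        for d in row:
            if d <= 0:
                out_row.append((0, 0, 0))
                continue
            t = (d - d_min) / span  # 0 = farthest, 1 = nearest
            out_row.append((round(255 * (1 - t)), 0, round(255 * t)))
        out.append(out_row)
    return out
```

For example, `disparity_to_colour([[10.0, 20.0, 0.0]])` gives a red pixel for the far point, a blue pixel for the near point and a black pixel where no match was found.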

See Figure 1 for an example of this disparity image in a game environment. The next stage will be to integrate our higher-resolution stereo system, Phobos, into Unity; this work will follow very soon.

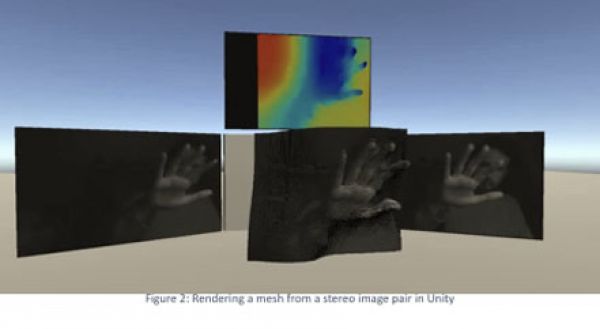

To render this depth image as real 3D data, the data had to be converted into a mesh. A mesh is a collection of vertices joined by edges and faces (usually triangles) that defines the surface of an object. It could be as simple as a cube or as complex as a model of a hand. In this project, the mesh represents how far each point in the scene is from the camera. This is calculated pixel by pixel, resulting in the mesh shown in Figure 2, which can be rendered at 30 fps while representing nearly a million points of depth data.
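The pixel-by-pixel conversion can be sketched as follows. This is not i3Dr's pipeline, which is not described here; it assumes a rectified stereo pair and an ideal pinhole camera with focal length `focal_px` (in pixels), principal point (`cx`, `cy`) and baseline `baseline_m`, all hypothetical parameters that would normally come from calibration. Each pixel's depth is recovered as Z = f·B/d, back-projected to a 3D vertex, and each 2×2 block of neighbouring pixels is split into two triangles.

```python
from typing import List, Optional, Tuple

Vertex = Tuple[float, float, float]
Triangle = Tuple[int, int, int]


def disparity_to_mesh(
    disparity: List[List[float]],
    focal_px: float,    # focal length in pixels (assumed, from calibration)
    baseline_m: float,  # distance between the two camera centres in metres
    cx: float,          # principal point x in pixels
    cy: float,          # principal point y in pixels
) -> Tuple[List[Optional[Vertex]], List[Triangle]]:
    """Build a grid mesh with one vertex per pixel of a rectified disparity image."""
    height, width = len(disparity), len(disparity[0])
    vertices: List[Optional[Vertex]] = []
    for v in range(height):
        for u in range(width):
            d = disparity[v][u]
            if d <= 0:
                vertices.append(None)  # no valid match, so no vertex
                continue
            z = focal_px * baseline_m / d  # depth from disparity
            x = (u - cx) * z / focal_px    # pinhole back-projection
            y = (v - cy) * z / focal_px
            vertices.append((x, y, z))

    triangles: List[Triangle] = []
    for v in range(height - 1):
        for u in range(width - 1):
            tl = v * width + u            # top-left pixel of this 2x2 block
            tr, bl, br = tl + 1, tl + width, tl + width + 1
            # Two triangles per block; skip any that touch a missing vertex.
            for tri in ((tl, bl, tr), (tr, bl, br)):
                if all(vertices[k] is not None for k in tri):
                    triangles.append(tri)
    return vertices, triangles
```

A one-megapixel image yields about a million vertices and two million triangles. In Unity, a mesh that size needs 32-bit indices (`Mesh.indexFormat = IndexFormat.UInt32`), because the default 16-bit index format is limited to 65,535 vertices. Real implementations also commonly drop triangles that span large depth jumps, so foreground objects are not joined to the background by stretched "skin"; triangle winding order (which side faces the viewer) depends on engine conventions and is not handled here.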

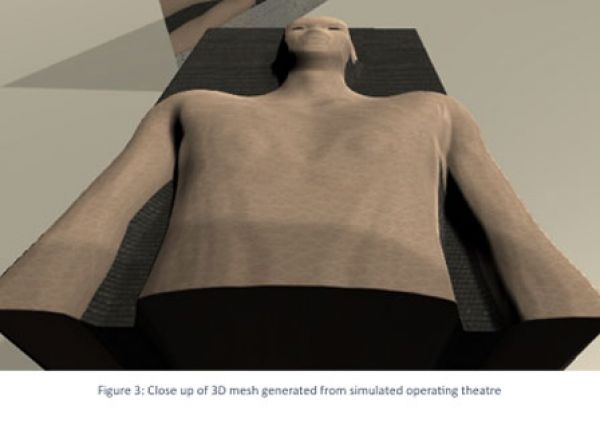

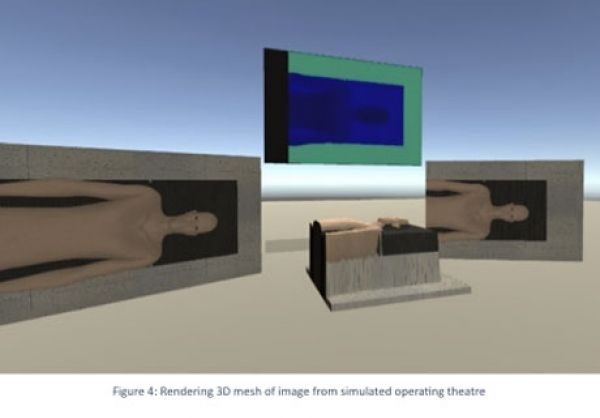

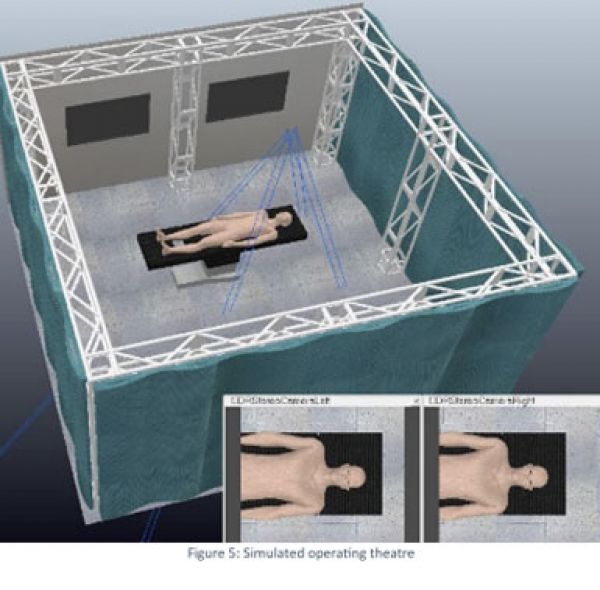

The goal of this project is to represent the 3D data of a patient, so this approach was also applied to simulated images captured from a virtual operating theatre designed earlier in the project. In this simulated environment, we were able to replicate what a stereo camera would see. Figure 3 shows the simulated scene and the images captured from the simulated camera. Feeding these images into the Unity 3D rendering pipeline developed by i3Dr produced a representation of the patient. Figure 5 shows the rendered environment and Figure 4 shows a close-up view of the 3D mesh generated from the simulated images. Because it conveys detailed depth information, this gives a user a much clearer understanding of the scene than a traditional image would.
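One advantage of a simulated scene is that depth is known exactly, so the disparity a stereo camera should measure can be computed directly. The Python sketch below assumes an ideal rectified pair: two identical pinhole cameras with parallel optical axes, the right camera offset by `baseline_m` along the x axis. Whether the virtual rig used in this project is set up this way is an assumption, and all parameter names are hypothetical.

```python
from typing import Tuple


def project_stereo(
    point: Tuple[float, float, float],  # (X, Y, Z) in the left camera frame
    focal_px: float,
    baseline_m: float,
    cx: float,
    cy: float,
) -> Tuple[Tuple[float, float], Tuple[float, float], float]:
    """Project a point into an ideal rectified stereo pair.

    The right camera is assumed to sit `baseline_m` metres along +X from the
    left camera, with identical intrinsics and parallel optical axes.
    Returns (left pixel, right pixel, disparity).
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the cameras")
    u_left = focal_px * x / z + cx
    u_right = focal_px * (x - baseline_m) / z + cx
    v = focal_px * y / z + cy     # same row in both images after rectification
    disparity = u_left - u_right  # equals focal_px * baseline_m / z
    return (u_left, v), (u_right, v), disparity
```

For a point 2 m in front of a rig with a 700-pixel focal length and a 0.1 m baseline, the disparity is 700 × 0.1 / 2 = 35 pixels. Comparing such ground-truth values with a matcher's output is a common way to evaluate stereo accuracy on synthetic data.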

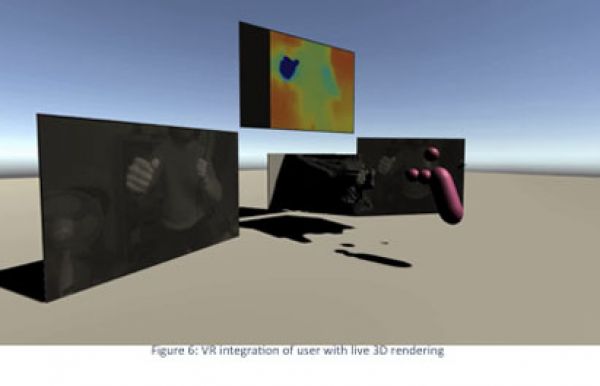

After further development, these scenes can also be viewed in a VR headset. An Oculus Quest was used to interact with the environment. A test application was developed that lets users interact with a 3D rendering of themselves using a live feed from Deimos, as shown in Figure 6. The pink spheres represent the VR player in the environment, and the mesh in the centre of the image can be seen matching their movements.

A video demonstration of this application can be found here: https://youtu.be/UPL_IBlW43E

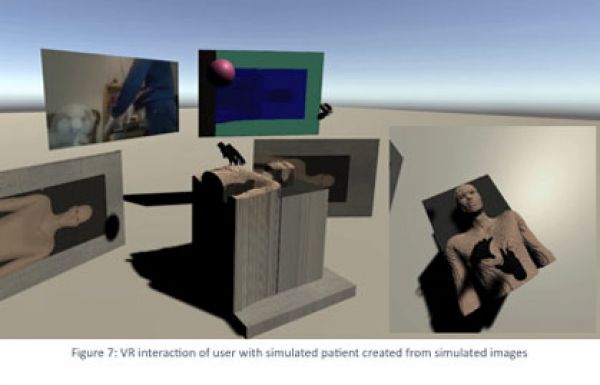

A second test application was developed to represent the simulated operating theatre. This early prototype clearly shows the potential of the system this project aims to create. Figure 7 shows a user interacting with the 3D rendering of the simulated patient images.

A webcam image was streamed into the environment to show that the real-world user can perform actions in the virtual environment from their own bedroom. Thanks to the Oculus Quest's hand-tracking feature, interaction feels very natural. The smaller image shows the user's perspective from the VR headset as they use their hands to interact with the 3D rendering.

A video demonstration of this application can be found here: https://youtu.be/HleasQEtEpY

As the project progresses, further developments will improve the 3D matching by using i3Dr's proprietary stereo matching algorithm to produce dense 3D results. Support for our high-resolution stereo camera systems is also in development, enabling highly detailed image capture and, in turn, detailed 3D results.
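i3Dr's matching algorithm is proprietary and is not described here. For readers unfamiliar with dense stereo matching, the simplest textbook approach is winner-takes-all block matching: for each pixel in the left image, compare a small window against horizontally shifted windows in the right image and keep the shift (disparity) with the lowest cost. The Python sketch below uses the sum of absolute differences (SAD) as the cost on one row of a rectified image pair; it is a generic illustration, not the algorithm used in this project.

```python
from typing import List


def block_match_row(
    left: List[List[float]],
    right: List[List[float]],
    row: int,
    max_disparity: int,
    half_window: int = 1,
) -> List[int]:
    """Winner-takes-all SAD block matching for one row of a rectified pair.

    For each left-image pixel, compare a (2*half_window+1)^2 window against
    windows shifted left by 0..max_disparity pixels in the right image and
    keep the shift with the smallest sum of absolute differences (SAD).
    Pixels too close to the border for a full window get disparity 0.
    """
    height, width = len(left), len(left[0])
    h = half_window
    result = [0] * width
    if row < h or row >= height - h:
        return result
    for x in range(h, width - h):
        best_d, best_cost = 0, float("inf")
        for d in range(max_disparity + 1):
            if x - d - h < 0:
                break  # shifted window would fall off the right image
            cost = 0.0
            for dy in range(-h, h + 1):
                for dx in range(-h, h + 1):
                    cost += abs(left[row + dy][x + dx] - right[row + dy][x - d + dx])
            if cost < best_cost:
                best_d, best_cost = d, cost
        result[x] = best_d
    return result
```

Block matching is cheap but produces noisy or missing results in low-texture regions, which is one reason more sophisticated methods such as semi-global matching are widely used when dense, reliable 3D output is required.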